By heissanjay · Published 1/18/2026

Evaluation-Driven ML Releases

A release workflow that treats eval quality gates as first-class deployment checks.


Production ML releases should pass quality gates the same way services pass CI checks.

Why eval gates matter

- They catch silent regressions before users do.

- They force teams to define measurable quality targets.

- They make rollback decisions objective.

Release checklist

- Run offline benchmark suite.

- Compare against current production baseline.

- Validate latency and cost budgets.

- Block deployment if any metric violates its threshold.
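The checklist maps fairly directly onto a small script that a CI job could run. The following is a minimal sketch, not a reference implementation: `release_check`, the metric names (`quality`, `latency_ms`, `cost_usd`), and all numbers are hypothetical, and `run_benchmarks` stands in for whatever offline suite a team already has.

```python
from typing import Callable, Dict, List

Metrics = Dict[str, float]


def release_check(
    run_benchmarks: Callable[[], Metrics],
    baseline: Metrics,
    budgets: Metrics,
    max_relative_drop: float,
) -> List[str]:
    """Return the list of violations; an empty list means the gate passes."""
    # 1. Run the offline benchmark suite on the candidate model.
    candidate = run_benchmarks()
    violations: List[str] = []

    # 2. Compare quality against the current production baseline.
    drop = (baseline["quality"] - candidate["quality"]) / baseline["quality"]
    if drop > max_relative_drop:
        violations.append(f"quality dropped {drop:.1%} vs. baseline")

    # 3. Validate latency and cost budgets (each budget is an upper bound).
    for name, limit in budgets.items():
        if candidate[name] > limit:
            violations.append(f"{name}={candidate[name]} exceeds budget {limit}")

    # 4. The caller blocks deployment when this list is non-empty.
    return violations


# Hypothetical numbers, for illustration only.
violations = release_check(
    run_benchmarks=lambda: {"quality": 0.80, "latency_ms": 650.0, "cost_usd": 1.5},
    baseline={"quality": 0.90},
    budgets={"latency_ms": 500.0, "cost_usd": 2.0},
    max_relative_drop=0.02,
)
print("BLOCKED" if violations else "OK", violations)
```

Returning the violations as a list, rather than a bare pass/fail flag, keeps the reason for a blocked release visible in the CI log.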

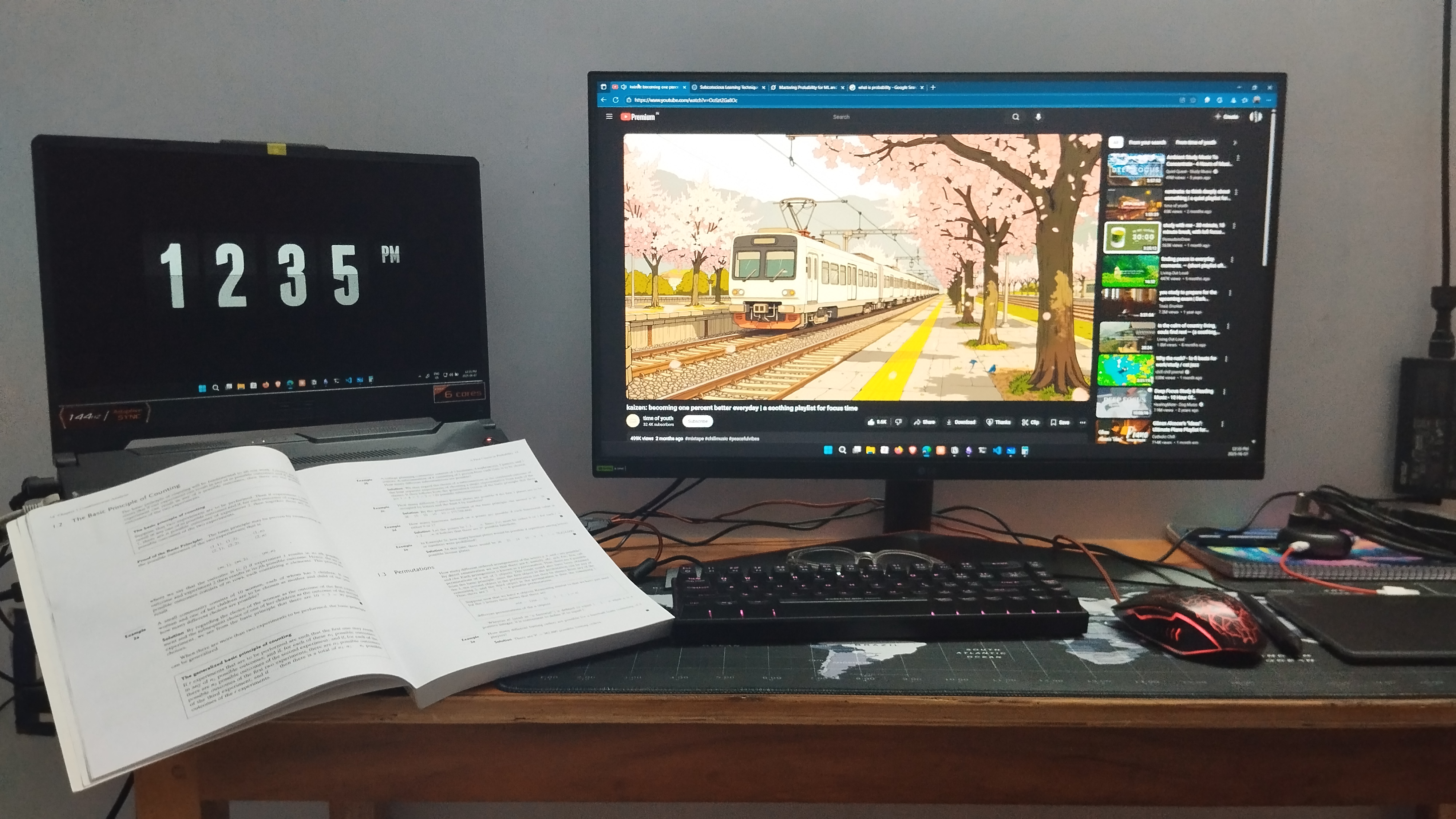


Deep dive

How we define gate thresholds

Thresholds combine absolute quality floors and relative regression limits.

Example: block the release if factuality drops more than 2% or latency increases more than 15% relative to the current production baseline.
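One way to encode a threshold that carries both an absolute limit and a relative regression limit is sketched below. The `Threshold` class and `violates` function are hypothetical names. The sketch reads the 2% and 15% figures as changes relative to the baseline value, which is an assumption, and the 0.85 factuality floor is invented purely for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Threshold:
    higher_is_better: bool
    # Floor when higher is better, ceiling otherwise. None disables the check.
    absolute_limit: Optional[float] = None
    # 0.02 means "at most 2% worse than baseline". None disables the check.
    max_relative_regression: Optional[float] = None


def violates(t: Threshold, candidate: float, baseline: float) -> bool:
    """True if the candidate value breaks either part of the threshold."""
    if t.absolute_limit is not None:
        if t.higher_is_better and candidate < t.absolute_limit:
            return True
        if not t.higher_is_better and candidate > t.absolute_limit:
            return True
    if t.max_relative_regression is not None and baseline > 0:
        change = (candidate - baseline) / baseline
        # A regression is a decrease for quality metrics, an increase for costs.
        regression = -change if t.higher_is_better else change
        if regression > t.max_relative_regression:
            return True
    return False


# Assumed thresholds: the 0.85 floor is hypothetical, 2% and 15% follow the example.
factuality = Threshold(higher_is_better=True, absolute_limit=0.85,
                       max_relative_regression=0.02)
latency = Threshold(higher_is_better=False, max_relative_regression=0.15)

block_release = (
    violates(factuality, candidate=0.87, baseline=0.90)   # about 3.3% drop
    or violates(latency, candidate=440.0, baseline=400.0)  # 10% increase
)
```

Keeping the two checks separate matters: a relative limit alone lets quality erode a little with every release, while an absolute floor alone permits a large single-release drop as long as the result stays above the floor.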